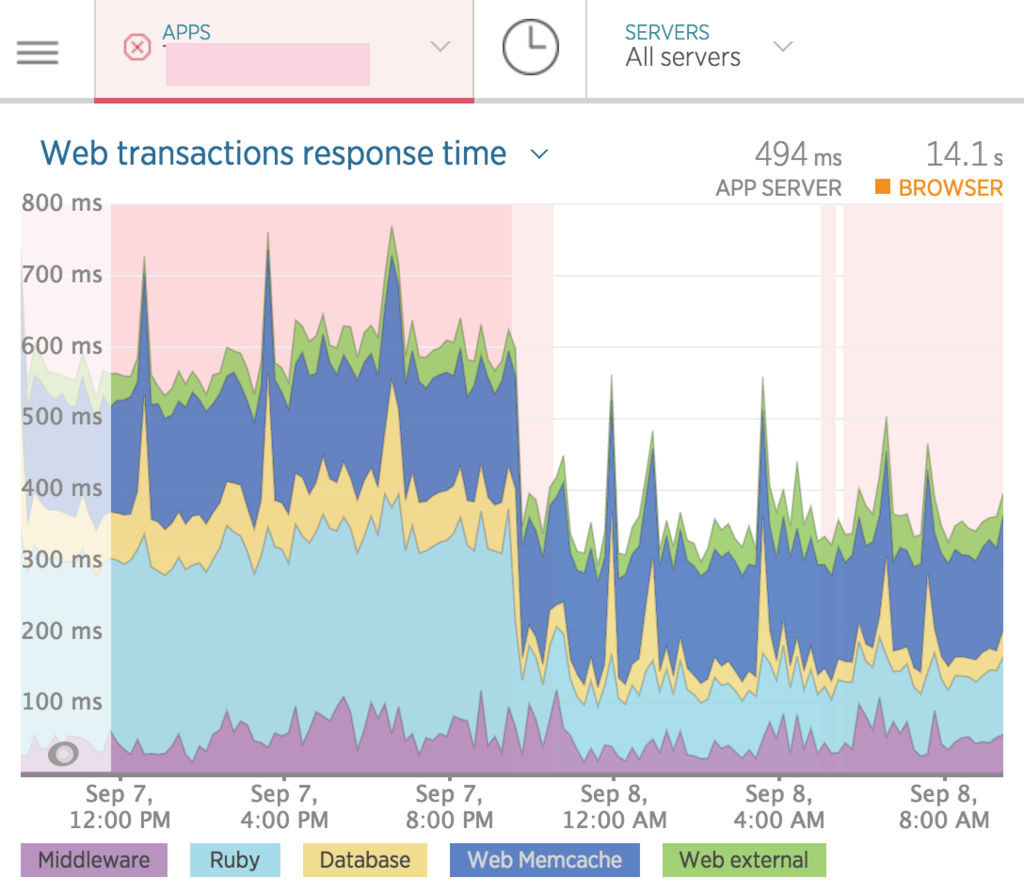

The Original Problem:

The client reported generally poor performance on their Ruby on Rails website. The original developers were gone and unavailable, and with them all knowledge of the site beyond what was in the repository. A Ruby/Rails consultant suggested the performance problems might be related to older versions of Passenger and/or Ruby.

Initial Investigation:

- No obvious smoking gun

- Database performance looked reasonable

- No clear commonality between 3 slowest transactions

- Some transactions running 'long'

- The sitemap transaction was running 10+ seconds on the back-end

Going After Low-Hanging Fruit

Digging into the sitemap transaction turned up this snippet in the Rails server log:

Cache write: views/127.0.0.1:3000/sitemap.xml ({:expires_in=>21600 seconds})

Value for TheSite:views/127.0.0.1:3000/sitemap.xml over max size: 1048576 <= 3237483

So the sitemap exceeds the maximum item size allowed for caching. Memcached has a simple parameter (the -I option) to increase the max item size. However, at this point I didn't know what other applications or services were using this memcache instance, nor what implications increasing the item limit might have on them.

The application uses Dalli as its memcache client. I decided to investigate using Dalli's compression instead, thereby avoiding reconfiguring the production memcache server. In brief:

- Memcache default max item size is 1MB

- Unknown what other systems use production memcache server

- Unknown implications of increasing max size from 1MB to 3MB+

- Using Dalli compression potentially allows leaving max size at 1MB

- Smaller risk: compression itself could hurt performance

- Mitigated by only compressing items that reach the current limit of 1MB
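The trade-off in the list above can be sketched with Ruby's standard Zlib library, which, as far as I know, is what Dalli's default compressor is built on. This is only an illustration under stated assumptions: the payload is synthetic, and `maybe_compress` is a hypothetical helper, not part of Dalli's API. Real sitemap data will compress at a different ratio.

```ruby
require 'zlib'

MAX_ITEM_SIZE        = 1_048_576 # memcached default item limit (1MB)
COMPRESSION_MIN_SIZE = 1_048_575 # threshold used in the config change

# Hypothetical helper: compress only payloads that reach the threshold,
# so small cache entries never pay the compression cost.
def maybe_compress(payload, min_size = COMPRESSION_MIN_SIZE)
  return [payload, false] if payload.bytesize < min_size

  [Zlib::Deflate.deflate(payload), true]
end

# Synthetic sitemap-like XML of roughly 3MB (an assumption for illustration).
entries = (1..30_000).map do |i|
  "<url><loc>http://example.com/pages/#{i}</loc>" \
  "<changefreq>weekly</changefreq><priority>0.5</priority></url>\n"
end
sitemap = "<urlset>\n#{entries.join}</urlset>\n"

stored, compressed = maybe_compress(sitemap)
small, small_compressed = maybe_compress('<urlset></urlset>')

puts "raw: #{sitemap.bytesize} bytes, stored: #{stored.bytesize} bytes"
puts "large item compressed: #{compressed}, small item compressed: #{small_compressed}"
puts "fits under item limit: #{stored.bytesize <= MAX_ITEM_SIZE}"
```

Repetitive markup such as a sitemap tends to deflate well, which is why compression was worth trying before touching the server's item limit.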

The One-Liner

config/production.rb

- config.cache_store = :dalli_store, 'xx.xx.xx.xx', { namespace: 'TheSite' }

+ config.cache_store = :dalli_store, 'xx.xx.xx.xx', { namespace: 'TheSite', compress: true, compression_min_size: 1048575 }

Testing the Fix

With the one-line fix in place, the memcache log shows that the sitemap can now be stored. Compressed, it is 460,312 bytes (down from 3,237,483), well under the 1MB item limit:

SET TheSite:views/127.0.0.1:3000/sitemap.xml Value len is 460312

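SET TheSite:all_tags Value len is 220248

From the Rails server log, writing to and then reading from the cache successfully. The first request renders the sitemap in about 13.7 seconds; the second is served from the cache in about 13.6 ms: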

Cache write: views/127.0.0.1:3000/sitemap.xml ({:expires_in=>21600 seconds})

method=GET path=/sitemap.xml format=xml controller=refinery/sitemap action=index

status=200 duration=13673.90 view=10225.80 db=946.16 time=2017-07-05 16:16:54 -0700

Cache read: http://127.0.0.1:3000/sitemap.xml?

Cache read: views/127.0.0.1:3000/sitemap.xml ({:expires_in=>21600 seconds})

method=GET path=/sitemap.xml format=xml controller=refinery/sitemap action=index

status=200 duration=13.55 view=0.00 db=0.00 time=2017-07-05 16:18:07 -0700

The Big Payoff

As it turned out, other cached items also exceeded the max size limit, and at least one of them was used in the majority of the site's transactions. The end result was an immediate overall gain in site performance, with a large reduction in average server-side processing time.
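One way to find such oversized items is to scan the Rails log for the same warning that gave the sitemap away. The sketch below is an assumption on my part rather than what was done on this project: the pattern is modelled on the single log line quoted earlier, `oversized_keys` is a hypothetical helper, and other Dalli versions may word the warning differently.

```ruby
# Pattern modelled on the "over max size" warning quoted earlier in this post.
OVERSIZE = /Value for (?<key>\S+) over max size: (?<limit>\d+) <= (?<size>\d+)/

# Hypothetical helper: returns { cache key => largest size seen }, biggest first.
def oversized_keys(lines)
  sizes = Hash.new(0)
  lines.each do |line|
    m = OVERSIZE.match(line)
    next unless m

    sizes[m[:key]] = [sizes[m[:key]], m[:size].to_i].max
  end
  sizes.sort_by { |_key, size| -size }.to_h
end

# Sample input taken from the log lines shown above.
sample = [
  'Cache write: views/127.0.0.1:3000/sitemap.xml ({:expires_in=>21600 seconds})',
  'Value for TheSite:views/127.0.0.1:3000/sitemap.xml over max size: 1048576 <= 3237483'
]

report = oversized_keys(sample)
report.each { |key, size| puts "#{size}\t#{key}" }
```

Against a real log this could be run as `oversized_keys(File.foreach('log/production.log'))`, where the log path is an assumption about a standard Rails layout.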